25 minutes, 5238 Words

2021-05-13 11:03

NYC Taxi Fare Prediction - Kaggle Competition

The New York City Taxi Fare Prediction Competition was a Kaggle competition ran back in 2018, 3 years from the time of writing this post. As such, the Kaggle competition is closed for submissions that count towards the leaderboard, but I really enjoy looking at this competition as an introduction to Kaggle and machine learning competitions in general. The dataset is very approachable for a beginner with a small number of features, however it has a large number of observations (individual taxi rides) which introduces new competitors to challenges involving time complexity and how much data should actually be loaded.

In this post I am going to run through one possible submission for this competition, explaining each step in a way that hopefully introduces readers to the world of machine learning competitions and each step in the process building up to a final submission.

The full code for this is on github provided in both a notebook and flat python file form. You can also find it as a notebook on Kaggle if you want to edit and run it quickly.

Part 1 - Examine and clean the data

The competition provides us with three files - train.csv, test.csv, and sample_submission.csv. The final sample submission file simply shows us the format to structure our final submission in and is not so important to understand initially.

# Import the packages that we will be using

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import geopandas as gpd

import optuna

import xgboost as xgb

from sklearn.metrics import mean_squared_error

from sklearn.model_selection import train_test_split

# Load in our data

train = pd.read_csv("data/input/train.csv", nrows=2000000)

test = pd.read_csv("data/input/test.csv")

- train.csv has a shape of (55423856, 8) meaning we have roughly 55.4 million observations (individual taxi fares) and 8 features for each of those observations. Our features are columns, while our observations are rows. This is a lot of individual fares! We’ve loaded in the first 2 million rows.

- test.csv is significantly smaller with a shape of (9914, 7). Note we have one less feature (column) than the train data - this is because fare_amount is removed from this data as it’s the data we want to predict the fare for!

Let’s look at the features of this data. As mentioned there are 8 in train.csv. These features are:

- key - the key represents the pickup date and time in local time (NYC)

- fare_amount - a USD value to two decimal places representing the cost of the trip, tolls included

- pickup_datetime - the pickup date and time for the specific trip in UTC format

- pickup_longitude - the pickup longitude position, presumably from an on-board GPS

- pickup_latitude - the pickup latitude position

- dropoff_longitude - the dropoff longitude position

- dropoff_latitude - the dropoff latitude position

- passenger_count - an integer value representing the number of passengers in the taxi

One of the great things about having over 55.4 million observations is that we can be quite aggressive in how we deal with missing/nonsensical data. One of the big challenges in dealing with missing data can be deciding whether we drop it completely or interpolate the missing values using a method such as mean imputation. With so many observations it is unlikely that simply dropping this data is going to have any effect our model at all. Before we look at missing values in this data, let’s generate some summary statistics for the values in the columns above using pandas.

# Let's take a quick initial look at an overview of our train data

train.describe()

This gives us a nice table which essentially generates some simple summary statistics for us to look over. If there is one thing that I want to really have sink in as you read this, it’s that the best way to do EDA (and honestly, the best way to critically analyse results in general), at least initially, is by doing a sanity check. On an approachable dataset like this, we can straight away identify a lot of issues simply from the summary table above.

Taking the very first column in the table, we can see that it describes the fare_amount for each observation. The maximum value across the data we have loaded is 1273.31, and the minimum value is -62. From a sanity point of view, does this make any sense? Perhaps a maximum fare of USD$1200 is possible for an extremely long trip, and this particular data point could be cross-referenced with the other features to confirm whether it is legitimate or not, but it’s unlikely to be legitimate, and looking further at this point, the distance of the trip was 0km (more on how we calculate this later), so it is not a legitimate observation. How about a negative taxi fare? Clearly it makes no sense, so we definitely want to put some constraints on the fare.

We also want to have a quick initial look at the passenger_count column. Again, this should be very intuitive and should be easy with a simple sanity check. The minimum value is zero, and the maximum is 208. I’m not sure what sort of taxis exist in NYC, but I’m betting there isn’t one that holds 208 passengers! The mean is 1.6 passengers per trip which makes a lot more sense. When cleaning this column we want to exclude all with a passenger_count of zero as it doesn’t make much sense to be charging for a trip with no passengers, and we’ll put a generous upper limit on the amount of passengers as well at 6.

# Perform cleaning here, drop NaN rows (rows with empty cells)

train.dropna(inplace=True)

# Drop the <=0 fares from the dataset, keeping only fares >0

train = train[train["fare_amount"] > 0]

# Now we want to look at the passenger count. If there is zero passengers, drop the observation

train = train[train["passenger_count"] > 0]

# If there is more than 6 passengers, also drop the observation

train = train[train["passenger_count"] <= 6]

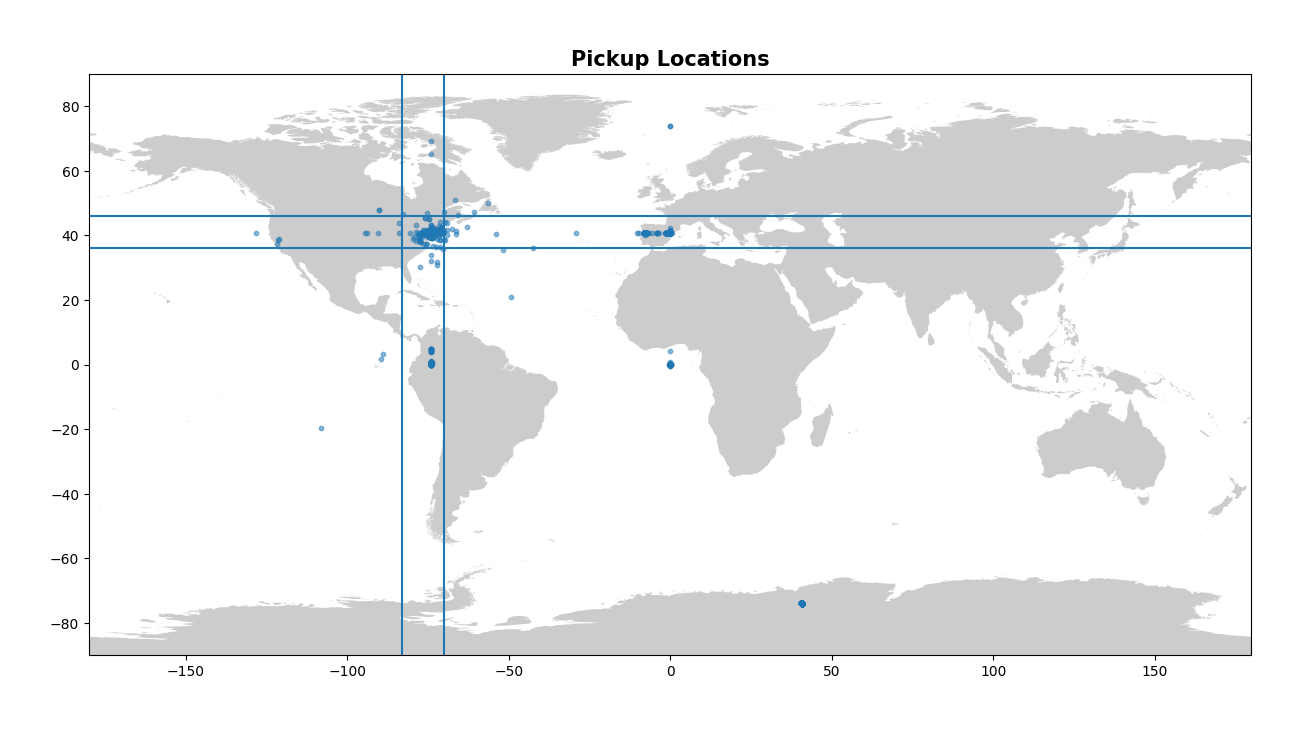

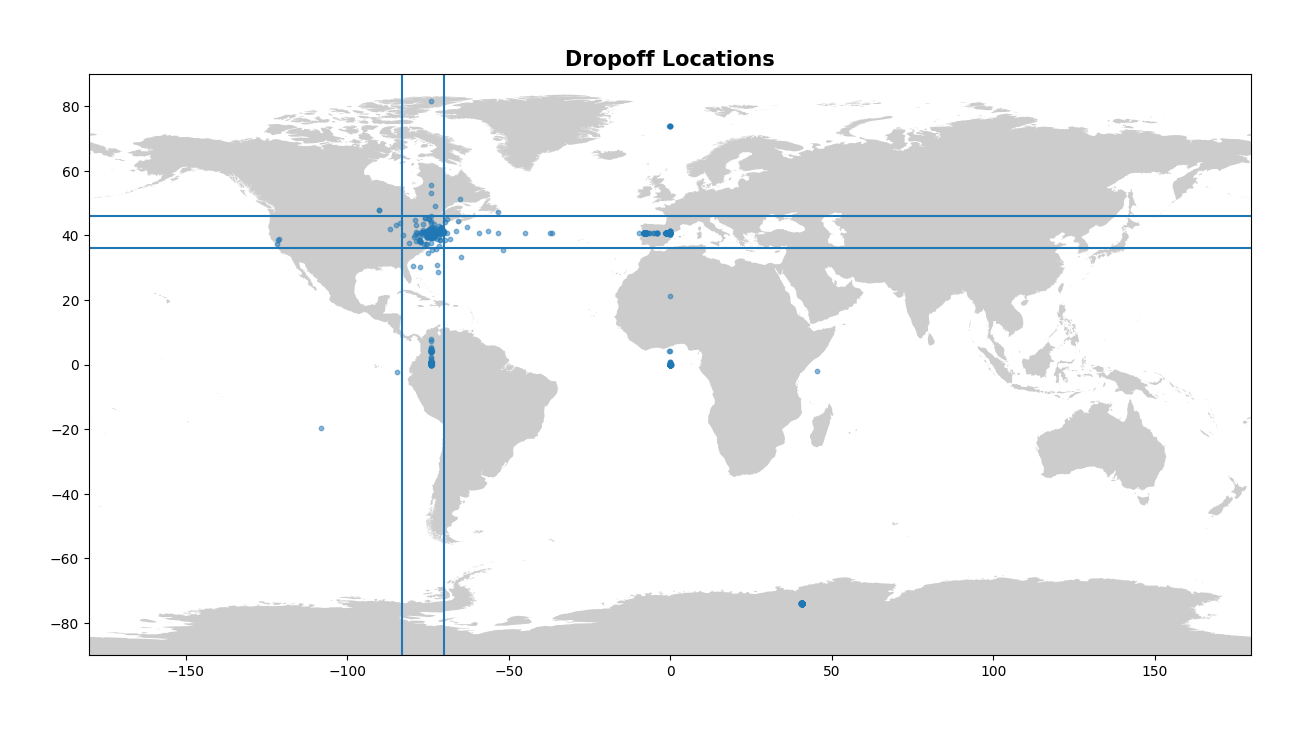

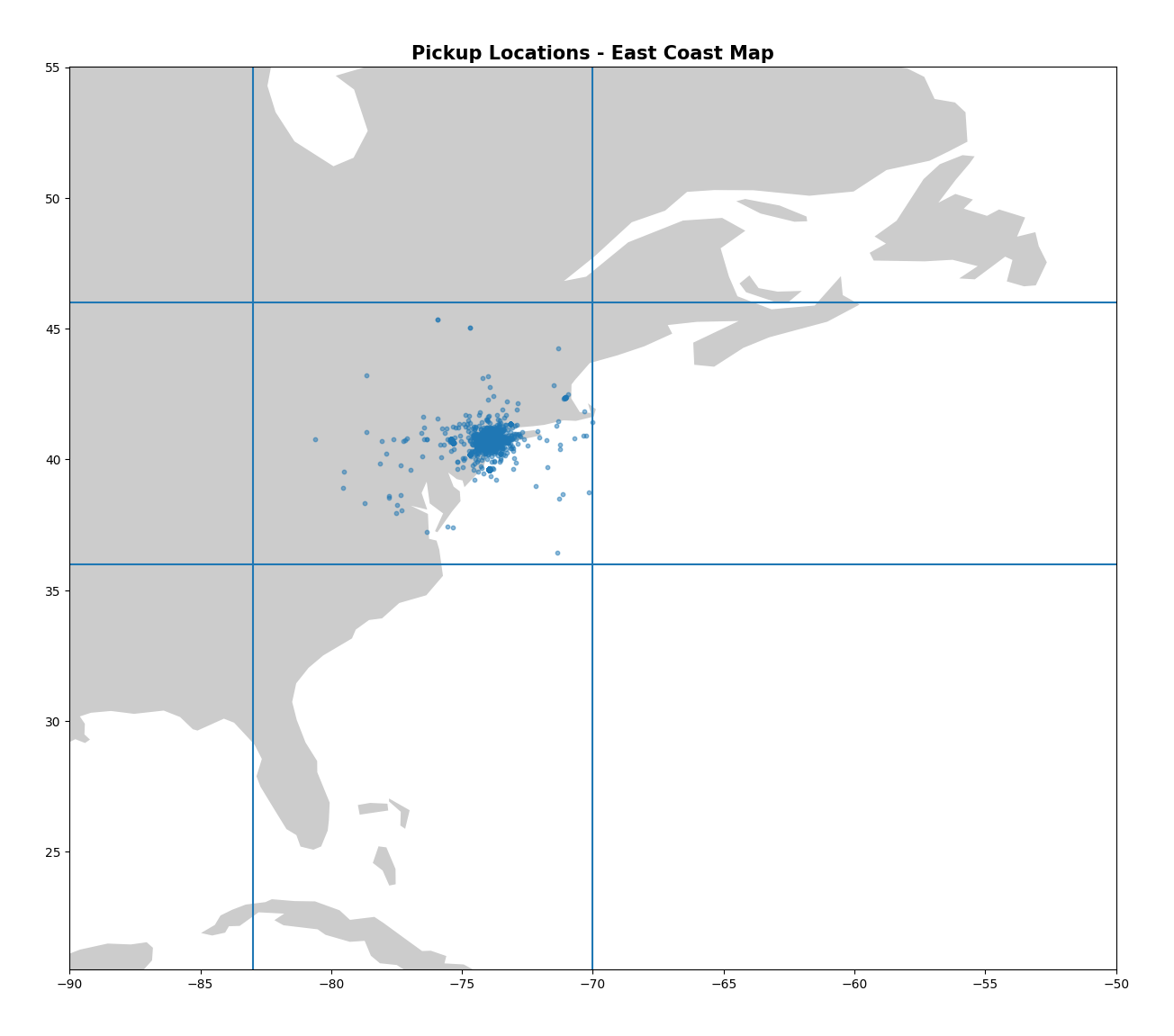

The next columns to inspect in the table describe the longitude and latitude for both pickup and dropoff. Now remember that this is a dataset for New York City taxis, so we want either the pickup or the dropoff location to be located within NYC, or at the very least NY state. A useful way to look for values that make no sense with geographic data is to plot them, so let’s plot both the pickup and dropoff locations on a map. Note that when we create these plots we are using a local shapefile for the map and we use the package GeoPandas to create a dataframe containing our points. There are many ways to visualise this and this is only one way - I would encourage the learner to explore both ends of the spectrum, from simple plots using matplotlib to complex interactive plots using plotly! I’ve also drawn four straight lines on each plot to very roughly represent a bounding box in which all our points should be contained.

street_map = gpd.read_file("shapefiles/worldmap/WB_Land_10m.shp")

# Create a dataframe using Geopandas to hold the pickup longitude and latitude values from the train dataset

geo_df = gpd.GeoDataFrame(train, geometry=gpd.points_from_xy(x=train["pickup_longitude"], y=train["pickup_latitude"]))

# Now we create our figure and axes and assign these to out plot

fig, ax = plt.subplots(figsize=(15, 15))

street_map.plot(ax=ax, alpha=0.4, color="grey")

geo_df.plot(ax=ax, alpha=0.5, legend=True, markersize=10)

# Give our plot a title

plt.title("Pickup Locations", fontsize=15, fontweight="bold")

# Set latitude and longitude boundaries for the large overview of the world map

plt.xlim(-180, 180)

plt.ylim(-90, 90)

# These lines very roughly represent where our cutoff point should be for NY state

plt.axvline(x=-70)

plt.axvline(x=-83)

plt.axhline(y=36)

plt.axhline(y=46)

plt.show()

# Create a dataframe using Geopandas to hold the dropoff longitude and latitude values from the train dataset

geo_df = gpd.GeoDataFrame(train, geometry=gpd.points_from_xy(x=train["dropoff_longitude"], y=train["dropoff_latitude"]))

# Now we create our figure and axes and assign these to out plot

fig, ax = plt.subplots(figsize=(15, 15))

street_map.plot(ax=ax, alpha=0.4, color="grey")

geo_df.plot(ax=ax, alpha=0.5, legend=True, markersize=10)

# Give our plot a title

plt.title("Dropoff Locations", fontsize=15, fontweight="bold")

# Set latitude and longitude boundaries for the large overview of the world map

plt.xlim(-180, 180)

plt.ylim(-90, 90)

# These lines very roughly represent where our cutoff point should be for NY state

plt.axvline(x=-70)

plt.axvline(x=-83)

plt.axhline(y=36)

plt.axhline(y=46)

plt.show()

Now this is a nice visual look at our pickup and dropoff data. It makes it abundantly clear that there is some issues! There’s points all over the globe, from Antarctica to France, to a clustering at Null Island (0, 0). Constraining the latitude and longitude to drop all values outside of NY will be important in cleaning up our data here. Let’s clean up the longitude and latitude values now.

Here we simply drop all values that have a longitude and latitude outside the bounds that we define. Note that this isn’t perfect as you will see in the later images, but it removes the majority of the outliers.

# Drop any records that have zero longitude/latitude, or long/lats that are outside bounds. Rembmer our longitude for NYC is always negative.

# This is going to be a rough chop, there is a significant amount of outliers to discuss

train = train[(train["pickup_longitude"] < -70) & (train["pickup_longitude"] > -83)]

train = train[(train["pickup_latitude"] > 36) & (train["pickup_latitude"] < 46 )]

train = train[(train["dropoff_longitude"] < -70) & (train["dropoff_longitude"] > -83)]

train = train[(train["dropoff_latitude"] > 36) & (train["dropoff_latitude"] < 46 )]

Let’s quickly confirm that the minimum and maximum latitude and longitude now makes sense for both the pickup and dropoff columns.

# Confirm that all our longitude and latitude values now fall within acceptable bounds

train.describe()

We can also confirm that we’ve dropped the NaN values by repeating the previous line:

print("NaN values:")

print(format(train.isna().sum()))

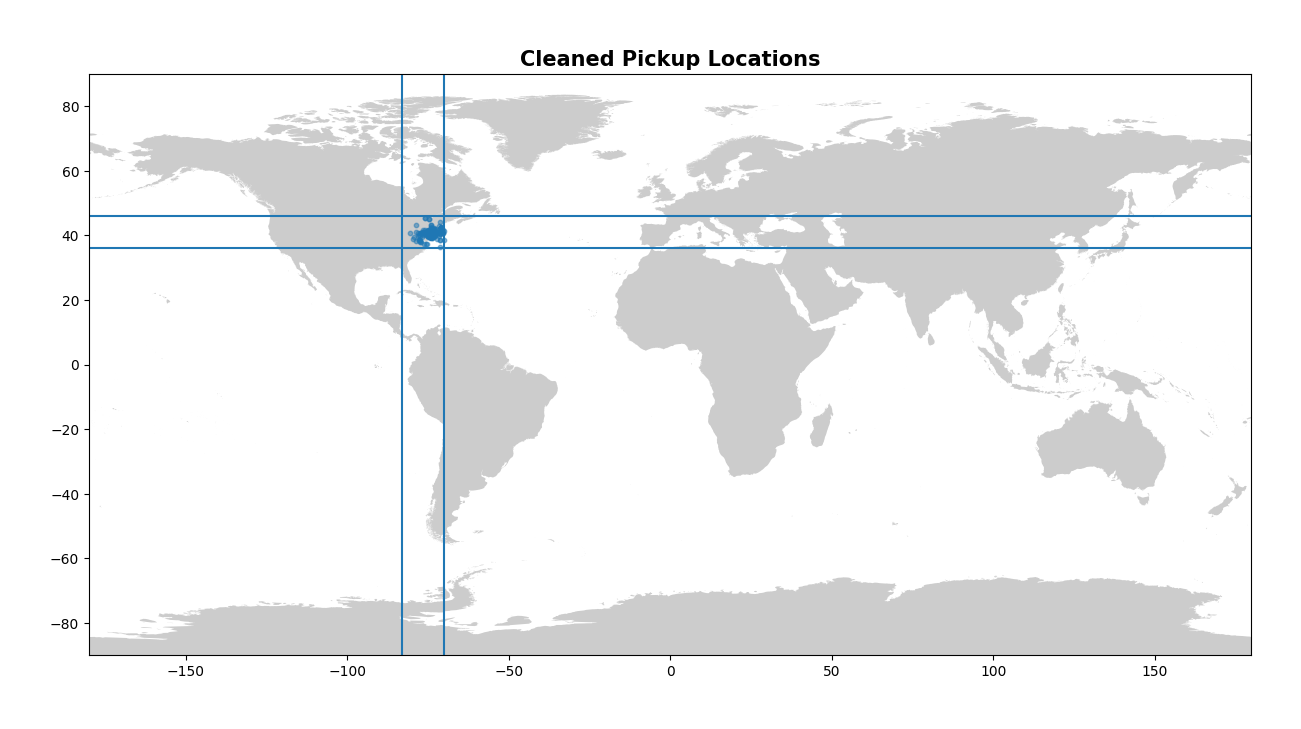

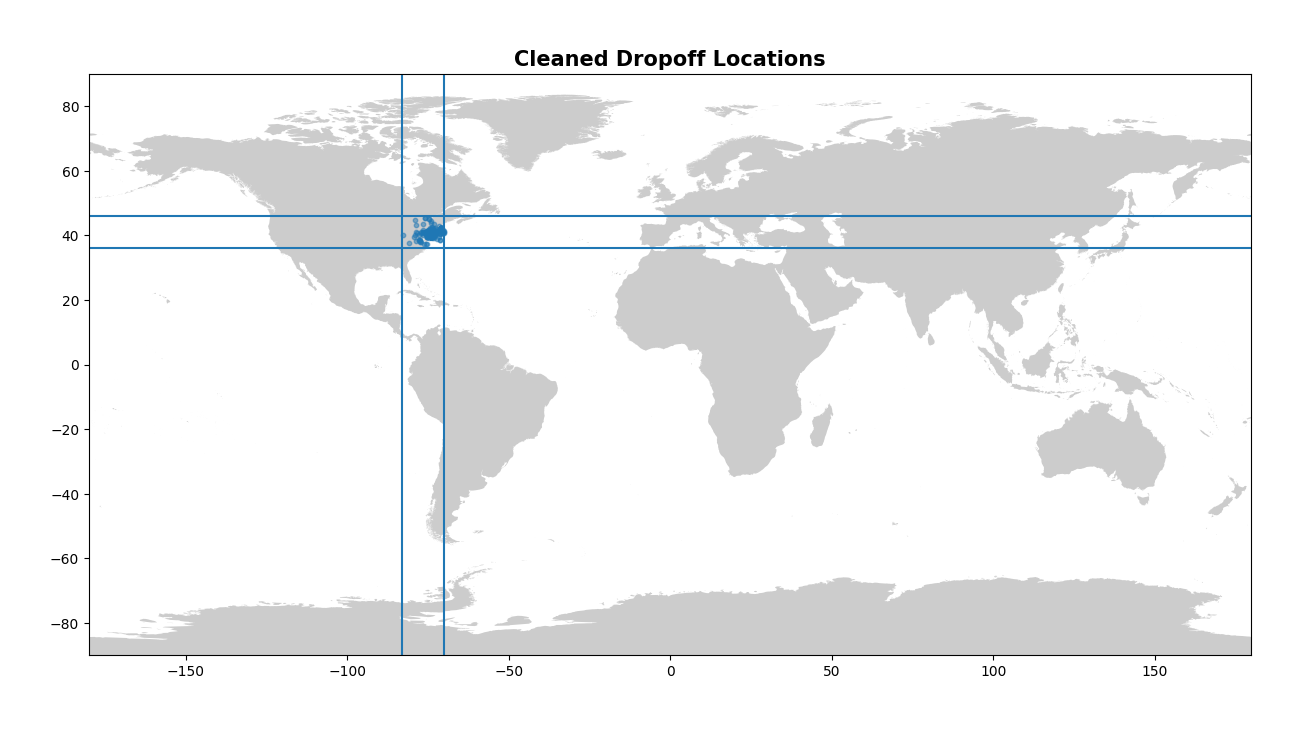

Great, there’s no NaN values in our data and the summary statistics look a lot better. Before looking at our cleaned visualisations of the pickup and dropoff location data, we can take a look at the final shape of our train data after cleaning.

# See the new shape of our data after dropping the outlier coordinates

print("Train data shape: ", train.shape)

From the initial 2 million observations that we loaded from the train data, we now have 1.95 million. This means we’ve dropped around 50,000 observations or only 2.5% of our total - not bad at all. This supports what we mentioned earlier about being able to be aggressive in the way that we drop data - we aren’t having any significant impact on our model quality by doing so, at least not to the level that we would be with a far smaller dataset.

Now let’s look at our latitude/longitude values. Again, visually is the best way to do this, and so we can create the same plots as last time and confirm that we’ve indeed dropped our outliers.

# Let us now visualise pickup and dropoff coordinates on two seperate maps so we can confirm that there are no outliers in our coordinates

geo_df = gpd.GeoDataFrame(train, geometry=gpd.points_from_xy(x=train["pickup_longitude"], y=train["pickup_latitude"]))

fig, ax = plt.subplots(figsize=(15, 15))

street_map.plot(ax=ax, alpha=0.4, color="grey")

geo_df.plot(ax=ax, alpha=0.5, legend=True, markersize=10)

plt.title("Cleaned Pickup Locations", fontsize=15, fontweight="bold")

# Set latitude and longitude boundaries

plt.xlim(-180, 180)

plt.ylim(-90, 90)

plt.axvline(x=-70)

plt.axvline(x=-83)

plt.axhline(y=36)

plt.axhline(y=46)

plt.show()

geo_df = gpd.GeoDataFrame(train, geometry=gpd.points_from_xy(x=train["dropoff_longitude"], y=train["dropoff_latitude"]))

fig, ax = plt.subplots(figsize=(15, 15))

street_map.plot(ax=ax, alpha=0.4, color="grey")

geo_df.plot(ax=ax, alpha=0.5, legend=True, markersize=10)

plt.title("Cleaned Dropoff Locations", fontsize=15, fontweight="bold")

# Set latitude and longitude boundaries

plt.xlim(-180, 180)

plt.ylim(-90, 90)

plt.axvline(x=-70)

plt.axvline(x=-83)

plt.axhline(y=36)

plt.axhline(y=46)

plt.show()

street_map = gpd.read_file("../input/eastus/ne_110m_land.shp")

# Create a dataframe using Geopandas to hold the pickup longitude and latitude values from the train dataset

geo_df = gpd.GeoDataFrame(train, geometry=gpd.points_from_xy(x=train["pickup_longitude"], y=train["pickup_latitude"]))

# Now we create our figure and axes and assign these to out plot

fig, ax = plt.subplots(figsize=(15, 15))

street_map.plot(ax=ax, alpha=0.4, color="grey")

geo_df.plot(ax=ax, alpha=0.5, legend=True, markersize=10)

# Give our plot a title

plt.title("Pickup Locations - East Coast Map", fontsize=15, fontweight="bold")

# Set latitude and longitude boundaries for the large overview of the world map

plt.xlim(-90, -50)

plt.ylim(20.496103, 55.01585)

# These lines very roughly represent where our cutoff point should be for NY state

plt.axvline(x=-70)

plt.axvline(x=-83)

plt.axhline(y=36)

plt.axhline(y=46)

plt.show()

street_map = gpd.read_file("../input/nycity/geo_export_21a50042-3764-4df5-b711-bf69e0736421.shp")

# Now we create our figure and axes and assign these to out plot

fig, ax = plt.subplots(figsize=(15, 15))

street_map.plot(ax=ax, alpha=0.4, color="grey")

geo_df.plot(ax=ax, alpha=0.5, legend=True, markersize=10)

# Give our plot a title

plt.title("Pickup Locations - NYC Map", fontsize=15, fontweight="bold")

# Set latitude and longitude boundaries for the

plt.xlim(-74.02, -73.925)

plt.ylim(40.7, 40.8)

plt.show()

These plots look much better! I enjoy looking at the final map of NYC as well - you can clearly see the shape of the city and bridges mapped out by the numerous pickups!

Now we move on to one of the columns that we haven’t looked at much yet - the pickup_datetime column. As we explained earlier it contains the date and time of the fare. However to get this into a format that Python understands we need to use the to_datetime call in Pandas. This is simple to do and we will do this for both our train and test data. This is preparing the datetime data for our next step - feature selection!

# Convert pickup date to datetime object

train['pickup_datetime'] = pd.to_datetime(train['pickup_datetime'], format="%Y-%m-%d %H:%M:%S UTC")

test['pickup_datetime'] = pd.to_datetime(test['pickup_datetime'], format="%Y-%m-%d %H:%M:%S UTC")

print("Datetime object created.")

Part 2 - New Features

Now we’ve cleaned up the existing features we can look at creating new features. I keep referring back to this, but when creating new features it’s great to do a sanity check. Given some common knowledge about a taxi business, what might be interesting as a feature that could improve our model? How about the day of the week? The hour at which you order a taxi? Do you think the fare would change ordering a taxi at 4pm as opposed to ordering one at 4am? In total we’re going to add six new features here, all from the datetime column that we previously worked with. These six features will be the hour, the day of the week, the day of the month, the week of the year, the month of the year, and the year.

# Create an hour feature

train['hour'] = train['pickup_datetime'].dt.hour

test['hour'] = test['pickup_datetime'].dt.hour

print("Hour created.")

# Create a day of week feature, map days from 0 to 6

train['dayofweek'] = train['pickup_datetime'].dt.dayofweek

test['dayofweek'] = test['pickup_datetime'].dt.dayofweek

print("DayOfWeek column created.")

# Create a day of the month feature

train['dayofmonth'] = train.pickup_datetime.dt.day

test['dayofmonth'] = test.pickup_datetime.dt.day

# Create a week of the year feature

train['week'] = train.pickup_datetime.dt.week

test['week'] = test.pickup_datetime.dt.week

# Create a month feature

train['month'] = train.pickup_datetime.dt.month

test['month'] = test.pickup_datetime.dt.month

# Create a year feature

train['year'] = train.pickup_datetime.dt.year

test['year'] = test.pickup_datetime.dt.year

Great, we’ve created six new features based on our datetime object. Thinking again for new features - how about the distance of the trip? It’s one of the most defining factors in determining the price for a taxi trip, and anyone who has sat in a taxi has seen the meter keep ticking over the further the trip goes on. We’ve got the pickup and dropoff coordinates, but we don’t have an actual distance. Luckily, we can use the great circle distance (the distance between two points on the surface of the Earth) to calculate this. This is also referred to as the haversine distance.

Now we simply define the haversine function and then call it for both our train and test sets. Now we have a new feature - distance_km!

# Define the Haversine distance formula to calculate the distance between two points on the Earth

def haversine_distance(lat1, lon1, lat2, lon2):

r = 6371 # Radius of the earth in km

phi1 = np.radians(lat1)

phi2 = np.radians(lat2)

delta_phi = np.radians(lat2 - lat1)

delta_lambda = np.radians(lon2 - lon1)

a = np.sin(delta_phi / 2)**2 + np.cos(phi1) * np.cos(phi2) * np.sin(delta_lambda / 2)**2

res = r * (2 * np.arctan2(np.sqrt(a), np.sqrt(1 - a)))

return np.round(res, 2)

# Calculate the ride distance and add it as a feature to our train and test sets

train['distance_km'] = haversine_distance(train["pickup_latitude"], train["pickup_longitude"], train["dropoff_latitude"], train["dropoff_longitude"])

test['distance_km'] = haversine_distance(test["pickup_latitude"], test["pickup_longitude"], test["dropoff_latitude"], test["dropoff_longitude"])

What other features can we look at adding? Well, we’ve added the distance from point A to point B of a trip. However, what if some trips have a fixed price? One of the common areas for taxi trips is to and from the airport, and New York has three major airports - JFK, EWR, and LGA. We can add new features to calculate the pickup and dropoff distance from all three major airports in the NY area.

def from_airport_distance(dataset):

# Define the coordinates for each airport

jfk_coords = (40.639722, -73.778889)

ewr_coords = (40.6925, -74.168611)

lga_coords = (40.77725, -73.872611)

dataset['pickup_jfk_distance'] = haversine_distance(jfk_coords[0], jfk_coords[1], dataset.pickup_latitude, dataset.pickup_longitude)

dataset['dropof_jfk_distance'] = haversine_distance(jfk_coords[0], jfk_coords[1], dataset.dropoff_latitude, dataset.dropoff_longitude)

dataset['pickup_ewr_distance'] = haversine_distance(ewr_coords[0], ewr_coords[1], dataset.pickup_latitude, dataset.pickup_longitude)

dataset['dropof_ewr_distance'] = haversine_distance(ewr_coords[0], ewr_coords[1], dataset.dropoff_latitude, dataset.dropoff_longitude)

dataset['pickup_lga_distance'] = haversine_distance(lga_coords[0], lga_coords[1], dataset.pickup_latitude, dataset.pickup_longitude)

dataset['dropof_lga_distance'] = haversine_distance(lga_coords[0], lga_coords[1], dataset.dropoff_latitude, dataset.dropoff_longitude)

return dataset

train = from_airport_distance(train)

test = from_airport_distance(test)

The final change we will make is converting the original four pickup and dropoff coordinate columns from degrees to radians. Why do we do this? There’s a great article here explaining why, the summary of which is that radians make far more sense than degrees for a model to interpret.

Now we’ve created all these new features, let’s take a look at them visually and see what they can tell us about our data.

First, let’s take a look at the distance of our trip and see how the fare amount varies with increasing distance.

# How does the distance affect the fare price?

plt.figure(figsize=(12, 8))

sns.scatterplot(data=train, x="fare_amount", y="distance_km", marker=".", alpha=0.6)

plt.xlabel('Fare amount')

plt.ylabel('Distance, km')

plt.xlim(0, 500)

plt.ylim(0, 500)

plt.title('Fare amount based on the distance')

plt.show()

This plot is very interesting. We can see a very high concentration of trips between 0 and 40km in distance, and the fare for these trips seems to follow a relatively linear relationship - as the distance increases, so does the fare. However, this isn’t the case for every trip visualised on this plot.

Something that could potentially improve this model is dropping trips above a certain distance. A trip above even 100km in a taxi would be incredibly rare in practice, and the fares certainly don’t make a lot of sense for these trips. As an example, on this plot, at the 150km distance, most of these trips cost less than $50! This isn’t realistic and really fails a logical check, so we could potentially improve our model by dropping these odd trips from our train data.

Next let’s look at the passenger count and how it affects the price, as well as the distribution of the passenger count in each trip.

# How does the passenger count affect the fare price?

plt.figure(figsize=(12, 8))

sns.scatterplot(data=train, x="passenger_count", y="fare_amount", s=10)

plt.xlabel('Number of Passengers')

plt.ylabel('Fare')

plt.show()

# How many passengers are in each trip?

plt.figure(figsize=(12, 8))

sns.countplot(data=train, x="passenger_count", color=(89/255, 117/255, 164/255, 1))

plt.xlabel('Number of Passengers')

plt.ylabel('Observations')

plt.yticks([200000, 400000, 600000, 800000, 1000000, 1200000, 1400000], ["0.2M", "0.4M", "0.6M", "0.8M", "1M", "1.2M", "1.4M"])

plt.show()

We can see there isn’t a significant relationship between passenger count and price. This is further apparent when we look at the distribution of passengers across all trips - the vast majority of trips have only one passenger on board.

We will now look at the hour of each trip and how this affects the fare price.

# How does the hour affect the fare price?

hour_price = train.groupby('hour', as_index=False)['fare_amount'].median()

# Generate a line plot of the median fare for each hour

plt.figure(figsize=(12, 8))

sns.lineplot(data=hour_price, x="hour", y="fare_amount", marker="o")

plt.xlabel('Hour of the day')

plt.ylabel('Median fare amount')

plt.title('Fare amount based on day time')

plt.xticks(range(24))

plt.show()

# Let's also look at amount of trips by hour

plt.figure(figsize=(12, 8))

sns.countplot(data=train, x="hour", color=(89/255, 117/255, 164/255, 1))

plt.xlabel('Hour')

plt.ylabel('Observations')

plt.show()

We can see the highest median fare price comes in at 4am, and this makes a lot of sense. At such an early hour there is unlikely to be many customers looking for a taxi, and as such fares are increased. As we move into peak hours and during the day, the median fare price drops dramatically. It is between the hours of 10pm and 5am that the median fare price is higher.

This assumption is supported by our bar plot as well. The amount of trips is at a minimum at 5am, and starts to drop off at around 10pm. Less trips in the evening and early hours of the morning, leading to higher fares during those hours. Interesting to note is that the busiest hour is 7pm, likely a combination of some people returning home from work, and others on their way out for dinner.

We’ll now look at the day of the week and how this affects the fare price, as well as the number of trips per day.

# How does the day of the week affect the fare price?

# Note that Monday is 0 and Sunday is 6 before labels are changed

day_price = train.groupby('dayofweek', as_index=False)['fare_amount'].median()

days = ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday", "Saturday", "Sunday"]

plt.figure(figsize=(12, 8))

sns.lineplot(data=day_price, x="dayofweek", y="fare_amount", marker="o")

plt.xlabel('Day of Week')

plt.ylabel('Median fare amount')

plt.title('Fare amount based on day of the week')

plt.xticks([0, 1, 2, 3, 4, 5, 6], days)

plt.show()

# How many trips are in each day?

plt.figure(figsize=(12, 8))

sns.countplot(data=train, x="dayofweek", color=(89/255, 117/255, 164/255, 1))

plt.xlabel('Day of Week')

plt.ylabel('Observations')

plt.xticks([0, 1, 2, 3, 4, 5, 6], days)

plt.show()

It’s interesting to see that there isn’t much of a relationship here. The fare amount remains constant except on a Monday, where the median fare is 40 cents lower than the rest of the days. The distribution of trips per day of the week doesn’t show any significant variation either - Monday has the lowest amount of trips, but not by a significant margin. Friday has the most trips which makes some sense, a combination of people still going to and from work (unlike on weekends), but also has the added spike of trips for people going out for dinner on the Friday evening.

We can now look at the week of the year and see how this might affect our fare price.

# How does the week of the year affect the price?

week_price = train.groupby('week', as_index=False)['fare_amount'].median()

plt.figure(figsize=(12, 8))

sns.lineplot(data=week_price, x="week", y="fare_amount", marker="o")

plt.xlabel('Week')

plt.ylabel('Median fare amount')

plt.xticks(range(1, 54))

plt.title('Fare amount based on the week')

plt.show()

# What is the frequency of trips in each week of the year?

plt.figure(figsize=(12, 8))

sns.countplot(data=train, x="week", color=(89/255, 117/255, 164/255, 1))

plt.xlabel('Week')

plt.ylabel('Observations')

plt.show()

The first thing you might notice here is that there’s 53 weeks on these figures! This is because the year 2012 was a year with 53 weeks, a phenomenon that occurs every 5-6 years. Our data includes the years 2009-2015.

It is interesting to note that the number of trips is significantly higher in the first 26 weeks of the year, at which point we see a significant decline in week 27, and then a steady trend for the remainder of the year. The number of trips also drops off significantly in the final weeks of the year (51 and 52), likely due to Christmas and New Year celebrations. There isn’t any other significant variation in the number of trips per week except in week 53, as there is only one week 53 in our data of 2009-2015.

We will now look at how the fare price changes through the months of the year.

# Get the median fare for each month

month_price = train.groupby('month', as_index=False)['fare_amount'].median()

# Plot the median fare amount each month in the year

plt.figure(figsize=(12, 8))

months = ["J", "F", "M", "A", "M", "J", "J", "A", "S", "O", "N", "D"]

sns.lineplot(data=month_price, x="month", y="fare_amount", marker="o")

plt.xlabel('Month')

plt.ylabel('Median fare amount')

plt.xticks(range(1, 13), months)

plt.title('Fare amount based on month of the year')

plt.show()

# How many trips are there each month?

plt.figure(figsize=(12, 8))

sns.countplot(data=train, x="month", color=(89/255, 117/255, 164/255, 1))

plt.xlabel("Month")

plt.ylabel("Observations")

plt.xticks(range(0, 12), months)

plt.show()

# How does the day of the month affect the price?

plt.figure(figsize=(15, 7))

sns.scatterplot(data=train, x="dayofmonth", y="fare_amount", s=10)

plt.xlabel('Date')

plt.ylabel('Fare Amount')

plt.show()

These plots are similar to our previous ones where we investigated the weeks of the year, but instead now we are looking at a different level of abstraction. The highest median fare amount occurs in December, and then a significant drop occurs to the lowest median fare amount in January. July and August also see a large dip in the median fare. When we look at our next plot of the number of trips, we can see the least amount of trips occur in July and August.

Other than these small variations there isn’t anything significant to point out one month being far busier than any other.

We will now move to the highest level of abstraction that we have - the year feature.

# Again we find the median fare price, but this time for each year

year_price = train.groupby('year', as_index=False)['fare_amount'].median()

# Now plot the median fare price for each year

plt.figure(figsize=(12, 8))

sns.lineplot(data=year_price, x="year", y="fare_amount", marker="o")

plt.xlabel('Year')

plt.ylabel('Median fare amount')

plt.title('Fare amount based on the year')

plt.show()

# Now plot the number of trips taken each year in our data

plt.figure(figsize=(12, 8))

sns.countplot(data=train, x="year", color=(89/255, 117/255, 164/255, 1))

plt.xlabel("Year")

plt.ylabel("Observations")

plt.title("Number of trips per Year")

plt.show()

It’s interesting to see that the median fare sees no change in 2009, 2010, and 2011, but in 2012 we see a relatively sharp increase of $0.75 in the median fare, and in 2013 we see an increase of $1.00. The median fare then remains the same through 2013, 2014, and 2015.

The number of trips per year doesn’t provide much insight into this, as it remains relatively steady with only mild fluctiations in the number of trips per year. The drop in 2015 is not important as we previously mentioned that this dataset simply doesn’t contain data for the entirety of 2015.

This is our visualisation complete. It’s always important to note that this is just a basic overview of each feature - you could go far further into depth with these, especially with the maps of NYC above. I would encourage any learners to take advantage of the ability to create interactive maps using Bokeh and other packages which can help visualise the trips in the state in an even better way.

In this section we introduced new features to our dataset which we are hoping will improve the accuracy of our model. Now it’s time to actually create our model and put that theory to the test.

Part 3 - Model Training

Now that we’ve done our preliminary data cleaning and feature selection, let’s look at creating multiple models that will allow us to predict the fare amount on our test data for the competition.

The first thing that I always like to do is look at the columns in the train and test data. We’ve added a number of new features to our data so it’s important to verify one last time that all the columns are as we expect before we begin to create a model.

# Take a look at our columns before we pass anything into our model to make sure we have the correct structure

print('Train columns:', train.columns.tolist())

print('\nTest columns:', test.columns.tolist())

# Remove the geometry column added for creating the plots earlier on the train data

train = train.drop("geometry", axis=1)

# Select only numeric features to use for our model training and predictions

# Note no key or pickup_datetime here

features = [

'pickup_longitude',

'pickup_latitude',

'dropoff_longitude',

'dropoff_latitude',

'distance_km',

'passenger_count',

'hour',

'dayofweek',

'dayofmonth',

'week',

'month',

'year',

'pickup_jfk_distance',

'dropof_jfk_distance',

'pickup_ewr_distance',

'dropof_ewr_distance',

'pickup_lga_distance',

'dropof_lga_distance']

We can see above that all the columns are correct. Note the absence of the fare amount column in the test data, as this is what we are trying to predict. Other than that, the datasets have the same columns. We’ve also defined the features we want to use in our model, ignoring the key and the fare amount in the train data.

For this notebook I’m only going to show one approach, using a package called Optuna.

Optuna is a package that will allow us to tune the hyperparameters of an XGBoost model by providing a set of hyperparameters to iterate through. It’s efficient and far faster than other methods such as GridSearchCV which I haven’t demonstrated here.

The first thing we do is define our objective function, specifying our train data and our train target. We then use train_test_split to split our train data into multiple sets, and then we define our hyperparameter grid, just as we would with any other hyperparameter searching package.

We then create an XGB Regression model which we will use to run through our hyperparameter grid.

# A list of hyperparameters available for tuning can be found at https://xgboost.readthedocs.io/en/latest/parameter.html

def objective(trial, data=train[features], target=train.fare_amount):

x_train, x_test, y_train, y_test = train_test_split(train[features], train.fare_amount, test_size=0.2, random_state=1)

param = {

'tree_method':'gpu_hist', # Use GPU acceleration

'lambda': trial.suggest_loguniform(

'lambda', 1e-3, 10.0

),

'alpha': trial.suggest_loguniform(

'alpha', 1e-3, 10.0

),

'colsample_bytree': trial.suggest_categorical(

'colsample_bytree', [0.5, 0.6, 0.7, 0.8, 0.9, 1.0]

),

'subsample': trial.suggest_categorical(

'subsample', [0.6, 0.7, 0.8, 1.0]

),

'learning_rate': trial.suggest_categorical(

'learning_rate', [0.008, 0.009, 0.01, 0.012, 0.014, 0.016, 0.018, 0.02]

),

'n_estimators': trial.suggest_categorical(

"n_estimators", [4000, 5000, 6000]

),

'max_depth': trial.suggest_categorical(

'max_depth', [7, 8, 10, 11, 12, 13, 14, 15, 16, 17, 18]

),

'random_state': 1,

'min_child_weight': trial.suggest_int(

'min_child_weight', 1, 300

),

}

model = xgb.XGBRegressor(**param)

model.fit(x_train, y_train, eval_set=[(x_test, y_test)], early_stopping_rounds=100, verbose=False)

predictions = model.predict(x_test)

rmse = mean_squared_error(y_test, predictions, squared=False)

return rmse

Now we create an Optuna study with 20 trials. We also generate some visualisations which will appear in the local notebook environment. To have these plots display in the browser instead (if running on a local jupyter kernel), add renderer=“browser” in the show method.

study = optuna.create_study(direction='minimize')

study.optimize(objective, n_trials=20)

print('Number of finished trials:', len(study.trials))

print('Best trial:', study.best_trial.params)

# Plot the optimisation history, showing the scores from all the trials

optuna.visualization.plot_optimization_history(study).show()

# Create a parallel coordinate plot

optuna.visualization.plot_parallel_coordinate(study).show()

# Plot the feature importance of each individual hyperparameter being tuned

optuna.visualization.plot_param_importances(study).show()

# Finally, plot the best parameters

study.best_params

# Now store the best hyperparameters that we obtained, as well as maintaining the random state and gpu acceleration for the final model

best_params = study.best_params

best_params['tree_method'] = 'gpu_hist'

best_params['random_state'] = 1

Now that we have obtained the best hyperparameters from our grid, we create a final XGB Regressor with these parameters and then fit the new model to our train data once again. We then make predictions on the provided test data and export these predictions as a CSV file.

clf = xgb.XGBRegressor(**(best_params))

clf.fit(train[features], train.fare_amount)

predictions = clf.predict(test[features])

# Confirm that our predictions are the same shape as the test data

print(predictions.shape)

print(test.shape)

holdout = pd.DataFrame({'key': test['key'], 'fare_amount': predictions})

holdout.to_csv('predictions.csv', index=False)

One final visualisation we generate is the feature importances for each feature in the model - note that this is different from the Optuna feature importance which is for the importance of each hyperparameter, not each feature in the model.

# Create a horizontal bar chart of the model feature importances, note that we have 18 features

feature_importances = pd.Series(clf.feature_importances_, index=train[features].columns)

feature_importances.nlargest(20).plot(kind='barh')

plt.show()

This simple submission using one model and hyperparameter tuning using Optuna gives a top 10% ranking in the competition. To further improve I would encourage the reader to think about the following topics:

- Data cleaning thoroughly, i.e. dropping outliers in a more accurate way than simply just chopping at a given latitude and longitude

- Further feature selection, either through dropping features that aren’t significant and creating further new features that may help model performance

- Multiple models (LGBM/RF are good starters), varied hyperparameter grids, and model ensembling

That’s everything for this notebook. I hope you’ve been able to learn something and if you have any feedback feel free to get in touch.

5238 Words

2021-05-13 11:03